Your AI medical system could be harming patients right now. Healthcare institutions are fast-tracking clinical AI tools, often ignoring the critical defects lurking within the code.

As AI healthcare spending heads toward a projected $45 billion by 2025, facilities keep repeating the same mistakes, leading to incorrect diagnoses and compromised patient care.

Biased algorithms, massive data breaches—these aren't just scare stories; they are active threats unfolding now.

Below are 10 AI implementation errors that vendors rarely mention and that are already leading to malpractice cases and putting patient lives at risk. Their silence could be deadly.

🚑 Top 10 AI Healthcare Mistakes to Avoid in 2026

| AI Healthcare Pitfall | Why It Matters |
|---|---|
| 🔍 Poor Data Quality Compromises Patient Outcomes | AI systems trained on flawed medical data lead to dangerous clinical decisions and wasted resources |
| ⚖️ Regulatory Compliance Failures | Ignoring FDA/MHRA frameworks creates legal liability and patient safety risks |
| 🚫 Hidden Algorithmic Bias in Medical AI | Some diagnostic algorithms perform 35% worse for certain demographics, widening healthcare disparities |
| 🤖 Clinical Judgment Replaced by AI Dependency | Automation bias leads doctors to accept AI recommendations without critical evaluation |
| 🔄 EHR Integration Failures | System silos create duplicate work and prevent critical information sharing between AI tools |
| 📈 Outdated AI Models in Clinical Practice | Failing to update AI with new medical research leads to declining performance |
| 👩‍⚕️ Staff Resistance to Healthcare AI Tools | Implementations without proper training see adoption rates below 30% |
| 💫 Hyped AI Capabilities vs. Medical Reality | Inflated expectations lead to abandoned AI projects and wasted healthcare investments |
| ⚠️ Unclear AI Malpractice Liability | Confusion about responsibility when AI recommendations harm patients |
| 🔒 Patient Data Security Breaches | Healthcare records sell for up to £250 each on the dark web, making AI systems prime targets |

1. Data Quality Ignorance

AI systems are only as good as the data they're trained on. Using outdated, incomplete, or inaccurate medical data is like trying to diagnose patients with obsolete medical knowledge: it simply doesn't work.

Many healthcare organisations rush to implement AI without properly vetting their data sources or establishing data governance protocols. The result? AI outputs that are unreliable or potentially harmful to patients.
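As an illustration of what vetting a data source can look like in practice, here is a minimal Python sketch of an automated audit that flags missing fields, implausible values, and stale records before they reach a training set. The field names, plausible ranges, and the five-year staleness cutoff are all assumptions made up for this example, not clinical standards; a real pipeline would take them from the organisation's own data governance policy.

```python
from datetime import date

# Hypothetical schema: field names and ranges are illustrative assumptions only.
REQUIRED_FIELDS = ("patient_id", "age", "systolic_bp", "recorded_on")
PLAUSIBLE_RANGES = {"age": (0, 120), "systolic_bp": (50, 300)}
MAX_RECORD_AGE_DAYS = 5 * 365  # assumed staleness cutoff


def audit_record(record: dict, today: date) -> list:
    """Return a list of data-quality problems found in one record."""
    problems = []
    # Incomplete data: any required field that is absent or None.
    for field in REQUIRED_FIELDS:
        if record.get(field) is None:
            problems.append(f"missing:{field}")
    # Inaccurate data: present values outside the plausible range.
    for field, (low, high) in PLAUSIBLE_RANGES.items():
        value = record.get(field)
        if value is not None and not (low <= value <= high):
            problems.append(f"out_of_range:{field}")
    # Outdated data: records older than the cutoff.
    recorded_on = record.get("recorded_on")
    if recorded_on is not None and (today - recorded_on).days > MAX_RECORD_AGE_DAYS:
        problems.append("stale:recorded_on")
    return problems


def audit_dataset(records: list, today: date) -> dict:
    """Count how often each problem occurs across the whole dataset."""
    counts = {}
    for record in records:
        for problem in audit_record(record, today):
            counts[problem] = counts.get(problem, 0) + 1
    return counts
```

Running `audit_dataset` over a candidate training set gives a quick tally of problem types, which can serve as a gate: if the counts exceed whatever threshold the governance team has agreed, the data goes back for cleaning rather than into the model.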

How to avoid this mistake:

2. Failure to Adhere to Regulatory Requirements

Healthcare is one of the most heavily regulated industries, and AI tools must comply with frameworks established by bodies like the FDA in the US or the MHRA in the UK. Bypassing these regulatory requirements not only exposes organisations to legal consequences but also puts patient safety at risk.

Many facilities implement AI solutions without understanding the regulatory landscape or securing proper approvals, leading to potential compliance issues down the road.

How to avoid this mistake:

3. Algorithmic Bias and Fairness Issues

AI systems trained on biased data can perpetuate or even amplify healthcare disparities. A 2024 study found that some diagnostic algorithms performed 35% worse for certain demographic groups simply because they were underrepresented in training data.

When AI makes biased recommendations, it creates unequal care standards that disproportionately affect vulnerable populations. This undermines the trust patients place in healthcare providers and can lead to poorer outcomes for affected groups.
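A performance gap between demographic groups only becomes visible when results are broken down by group. The sketch below, in Python, computes sensitivity (the share of true cases the model catches) per group and the relative shortfall of the worst-served group. The input format of `(group, true_label, predicted_label)` tuples and both function names are assumptions for illustration; sensitivity is just one of several fairness metrics an audit might use.

```python
from collections import defaultdict


def sensitivity_by_group(rows):
    """rows: iterable of (group, true_label, predicted_label) with 0/1 labels.

    Returns {group: sensitivity}, where sensitivity is
    true positives / actual positives. Groups with no positive
    cases are omitted rather than scored.
    """
    true_pos = defaultdict(int)
    actual_pos = defaultdict(int)
    for group, truth, predicted in rows:
        if truth == 1:
            actual_pos[group] += 1
            if predicted == 1:
                true_pos[group] += 1
    return {group: true_pos[group] / n for group, n in actual_pos.items()}


def relative_gap(scores):
    """Relative shortfall of the worst group against the best (0.0 means parity)."""
    if not scores:
        return 0.0
    best, worst = max(scores.values()), min(scores.values())
    return (best - worst) / best if best else 0.0
```

For example, if the model catches 8 of 10 true cases in one group but only 5 of 10 in another, `relative_gap` reports a shortfall of roughly 0.375, meaning the second group is served about 37.5% worse on this metric. Small groups produce noisy estimates, so any real audit would also need to report sample sizes alongside these figures.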

How to avoid this mistake:

4. Over-reliance on AI Recommendations

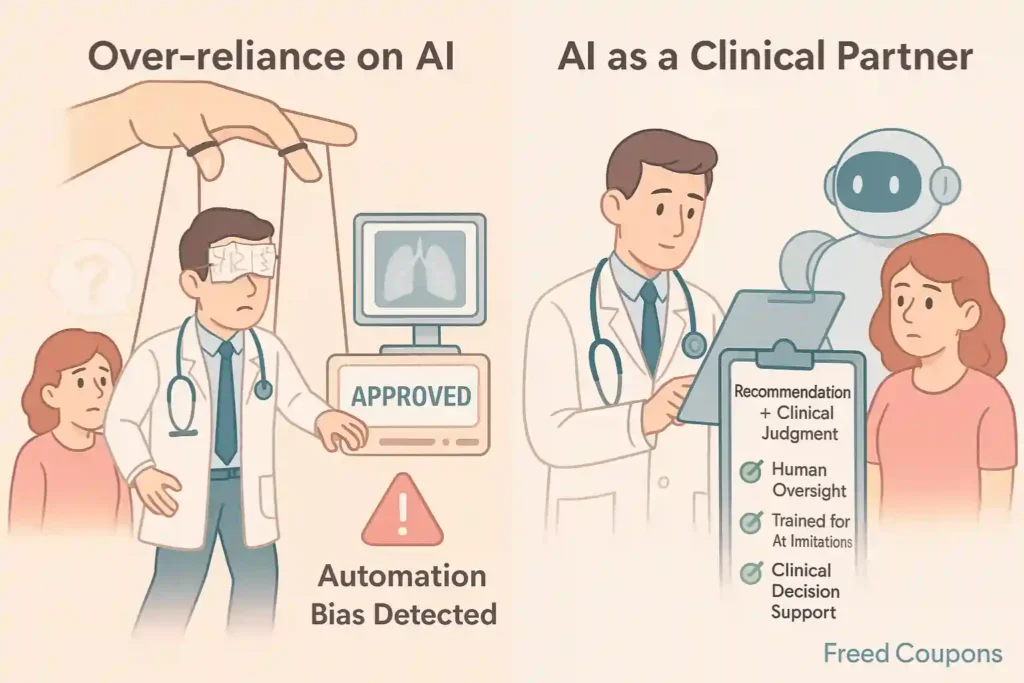

Despite impressive capabilities, AI should complement, not replace, clinical judgment. The danger comes when healthcare providers begin to trust AI recommendations without critical evaluation – what experts call “automation bias.”

AI lacks the contextual understanding, empathy, and nuanced judgment that come from years of clinical experience. Trusting it blindly can lead to missed diagnoses or inappropriate treatments.

How to avoid this mistake:

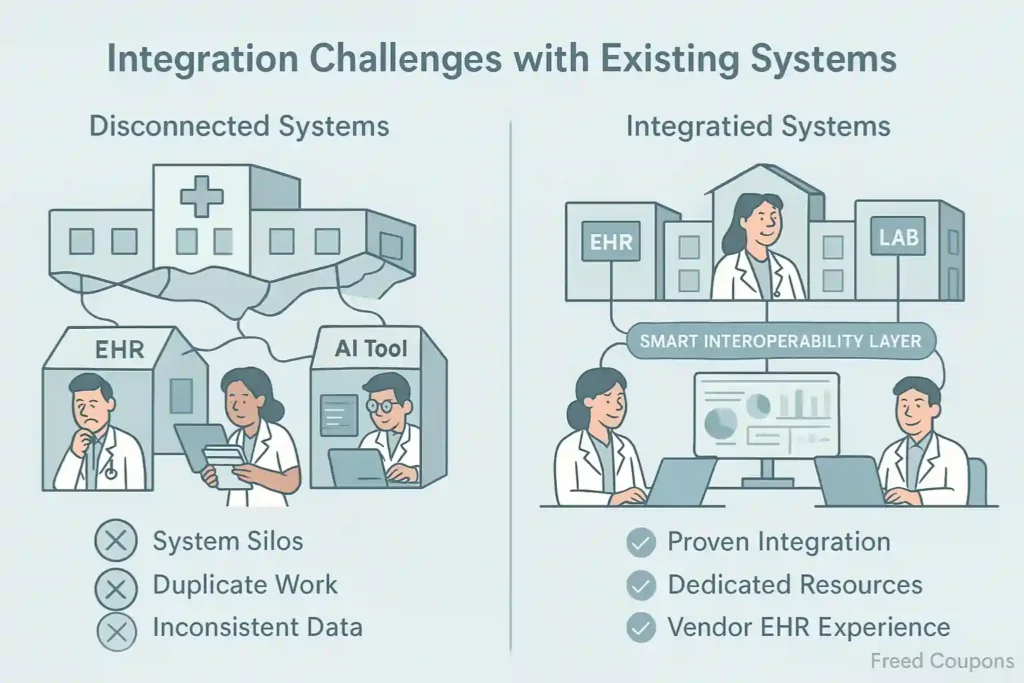

5. Integration Challenges with Existing Systems

Healthcare organisations often operate dozens of legacy systems that don't communicate well with each other. Adding AI without considering interoperability creates what IT professionals call “system silos” – isolated tools that can't share critical information.

Failed integration means duplicate work, inconsistent data, and frustrated staff who must navigate multiple systems to complete simple tasks.

How to avoid this mistake:

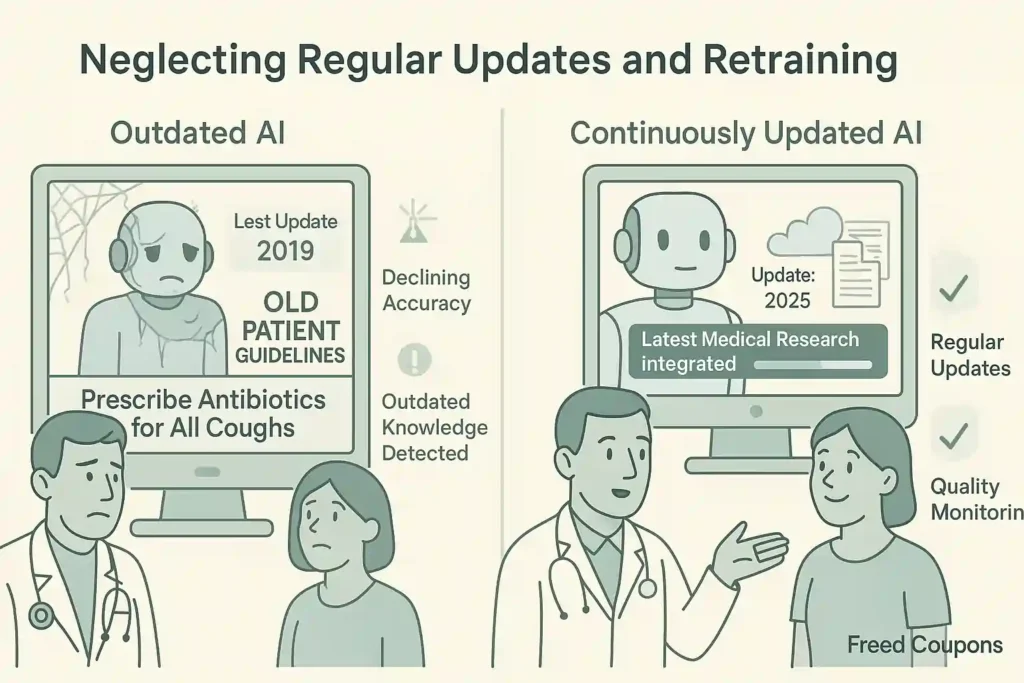

6. Neglecting Regular Updates and Retraining

Healthcare knowledge evolves rapidly, with thousands of new research papers published monthly. AI systems need regular updates to incorporate new medical knowledge and adapt to changing practice patterns.

Many organisations implement AI as a “set-and-forget” solution, failing to budget for ongoing maintenance. This leads to declining performance and potentially outdated recommendations.
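Declining performance is easier to catch when it is measured continuously rather than discovered after the fact. The following Python sketch keeps a rolling window of whether each AI prediction was later confirmed and flags the model for review when rolling accuracy falls meaningfully below the accuracy measured at validation. The class name, window size, and tolerance are hypothetical values chosen for the example, and it assumes confirmed outcomes are available to feed back in.

```python
from collections import deque
from typing import Optional


class DriftMonitor:
    """Tracks rolling accuracy and flags a drop below the validation baseline."""

    def __init__(self, baseline_accuracy: float, window: int = 200, tolerance: float = 0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # Keeps only the most recent `window` outcomes (1 = confirmed, 0 = not).
        self.outcomes = deque(maxlen=window)

    def record(self, prediction_was_correct: bool) -> None:
        self.outcomes.append(1 if prediction_was_correct else 0)

    def rolling_accuracy(self) -> Optional[float]:
        # Report nothing until the window is full, to avoid noisy early alarms.
        if len(self.outcomes) < self.outcomes.maxlen:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        accuracy = self.rolling_accuracy()
        return accuracy is not None and accuracy < self.baseline - self.tolerance
```

A monitor created with `DriftMonitor(baseline_accuracy=0.90)` would call `record(...)` each time a prediction is confirmed or refuted, and a scheduled job could check `needs_review()` to trigger retraining or a clinical safety review. This is a deliberately simple threshold rule; it says nothing about why performance dropped.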

How to avoid this mistake:

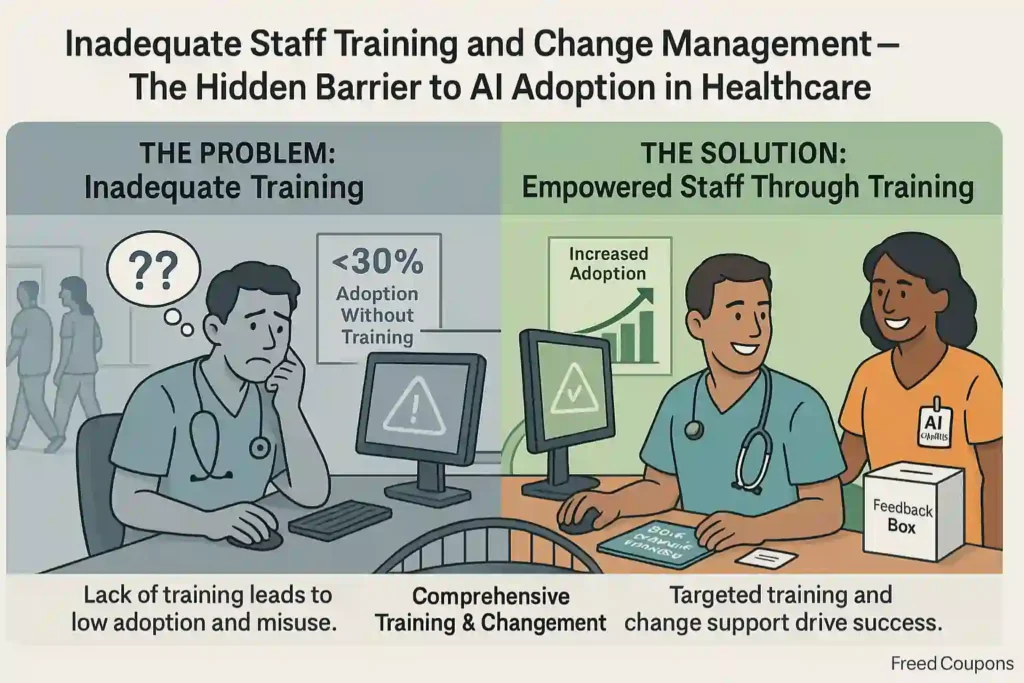

7. Inadequate Staff Training and Change Management

Even the best AI tools fail when staff don't understand how to use them properly. Research shows that healthcare AI implementations without robust training programs have adoption rates below 30%.

When clinicians receive insufficient training, they either avoid using AI tools or use them incorrectly, undermining potential benefits and creating new risks.

How to avoid this mistake:

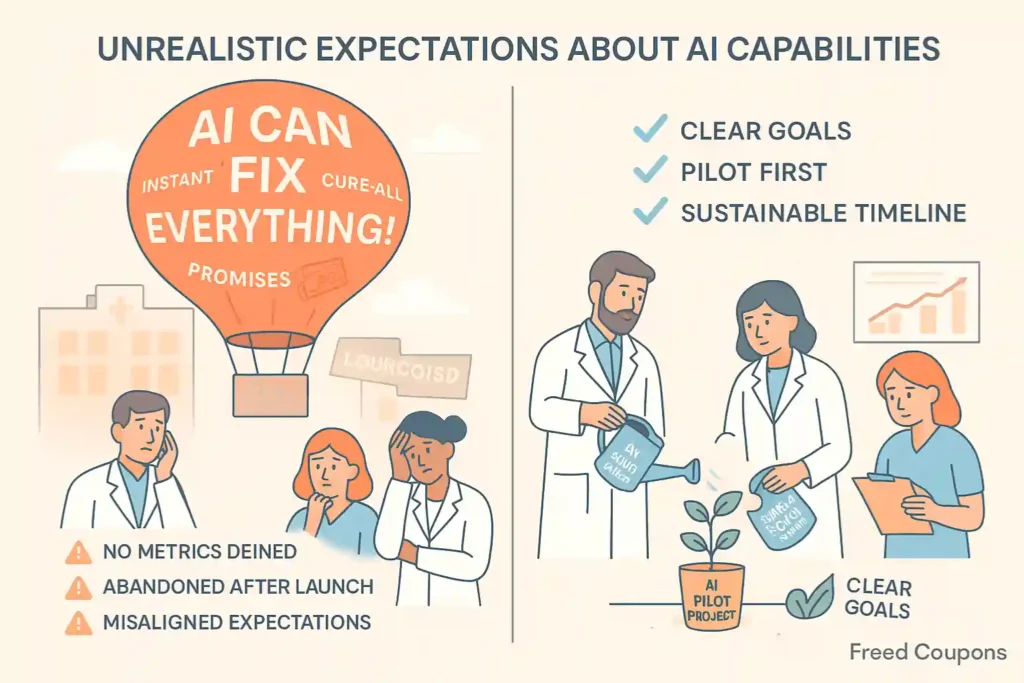

8. Unrealistic Expectations About AI Capabilities

Marketing hype often creates unrealistic expectations about what AI can actually deliver. When reality falls short of these inflated promises, organisations become disillusioned and may abandon potentially beneficial technologies.

Healthcare leaders sometimes expect AI to solve complex systemic problems overnight. When it doesn't, the technology gets blamed rather than the implementation approach.

How to avoid this mistake:

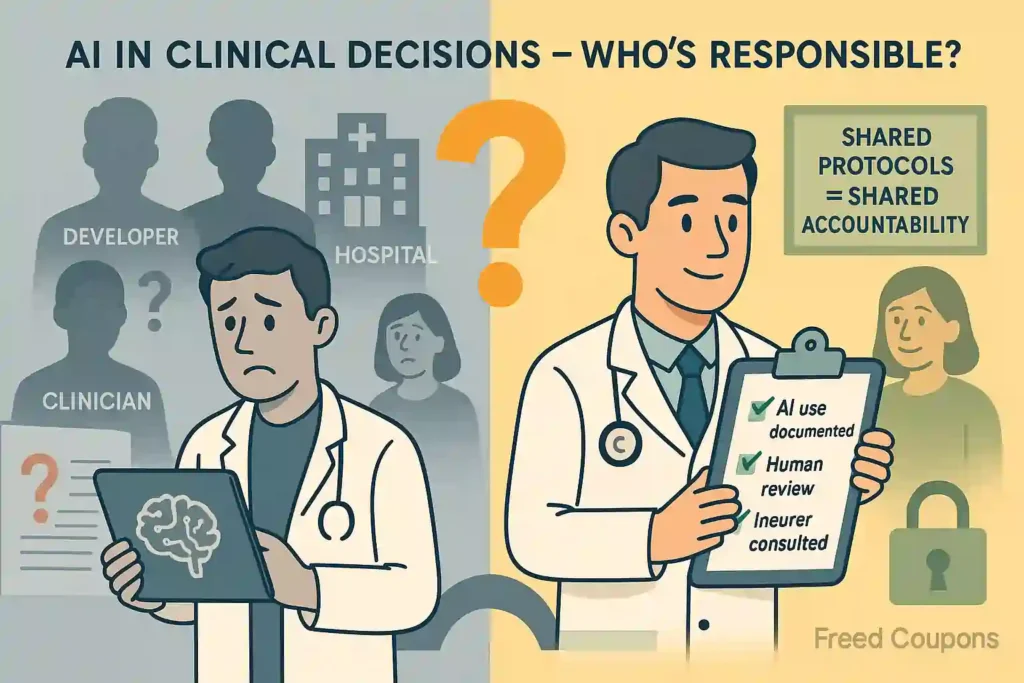

9. Professional Liability Uncertainties

As AI takes on more clinical decision-making, questions of liability become increasingly complex. Who's responsible when AI recommendations lead to harm? The developer? The healthcare provider? The clinician who followed the recommendation?

Without clear liability frameworks, healthcare organisations face uncertainty about their exposure to malpractice claims involving AI-assisted decisions.

How to avoid this mistake:

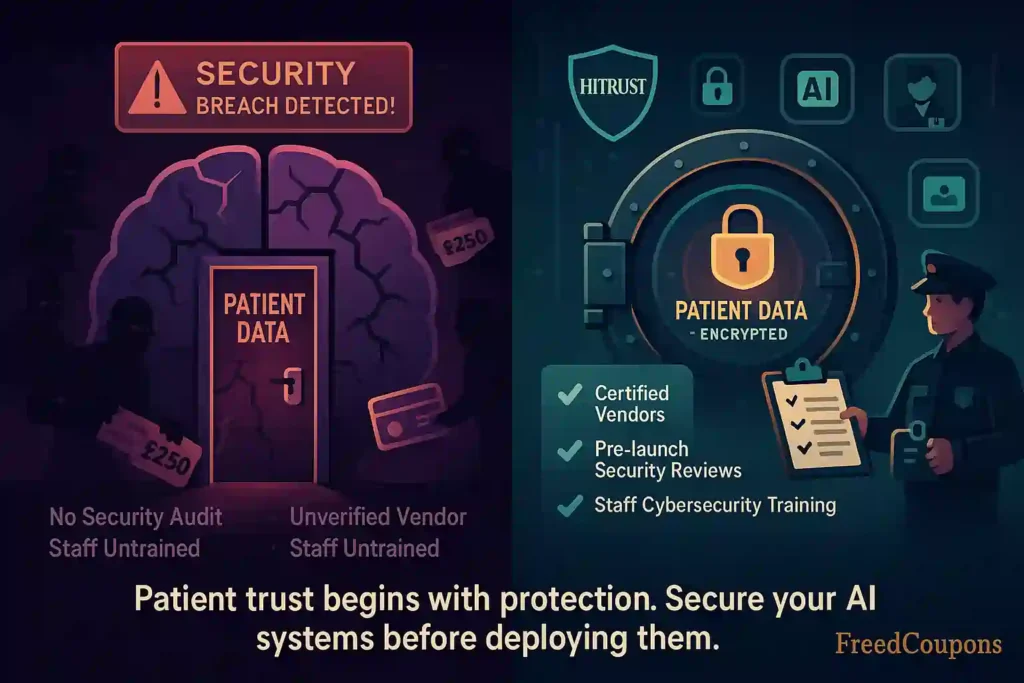

10. Data Privacy and Security Vulnerabilities

Healthcare data is among the most valuable targets for cybercriminals, selling for up to £250 per record on dark web marketplaces. AI systems that process patient data must maintain rigorous security standards.

Many organisations implement AI without sufficient security protocols, creating new vulnerabilities in their digital infrastructure.
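One common layer of protection is to pseudonymise records before they ever reach an AI pipeline, so that a breach of the AI system does not directly expose who the patients are. The Python sketch below replaces the patient identifier with a keyed hash (HMAC-SHA256 from the standard library) and drops direct identifiers. The field names and the `pseudonymise` function are assumptions for illustration, and this is only one layer: it does not by itself make a system compliant or secure, and the secret key must be stored separately from the data.

```python
import hashlib
import hmac

# Hypothetical field names for directly identifying data.
DIRECT_IDENTIFIERS = ("name", "address", "phone")


def pseudonymise(record: dict, secret_key: bytes) -> dict:
    """Return a copy with patient_id replaced by a keyed hash and direct identifiers removed."""
    token = hmac.new(
        secret_key,
        record["patient_id"].encode("utf-8"),
        hashlib.sha256,
    ).hexdigest()
    # Build a new dict so the original record is left untouched.
    cleaned = {key: value for key, value in record.items() if key not in DIRECT_IDENTIFIERS}
    cleaned["patient_id"] = token
    return cleaned
```

Because the hash is keyed, the same patient always maps to the same token (so records can still be linked for analysis), while someone who obtains the data without the key cannot recompute tokens from known patient IDs.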

How to avoid this mistake:

How AI Medical Scribes Are Addressing These Challenges

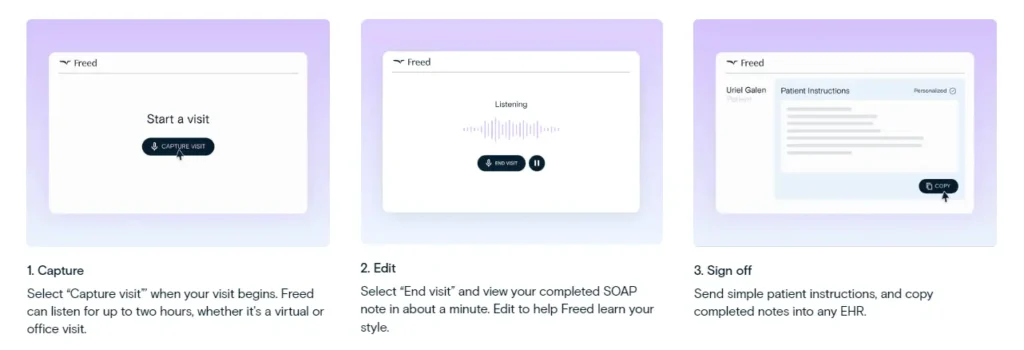

Solutions like Freed AI are addressing many of these challenges by providing AI medical scribe services that support healthcare providers while avoiding common pitfalls. These tools listen to patient-provider conversations and automatically generate clinical documentation, saving clinicians an average of 2 hours daily.

Freed AI has grown tremendously since launching in 2023, expanding to over 17,000 paying customers across 96 specialities. Their approach specifically addresses several key challenges:

The system works through a simple process: clinicians activate the AI during patient visits, and when the conversation ends, Freed AI generates structured clinical documentation that physicians can review, edit, and incorporate into their EHR systems.

The Hard Truth About AI in Healthcare

Your medical facility stands at a crossroads. Addressing these critical mistakes separates healthcare winners from costly failures. Tools like AI medical scribes show what's possible when implementation is done right, but rushing forward blindly risks wasted millions and patient harm.

Success isn't about replacing doctors with algorithms. It's about finding the perfect balance between clinical judgment and machine capability. The gap between AI success and failure isn't technical—it's strategic.